Using TensorFlow Object Detection API

Train a Deep Learning model for custom object detection using TensorFlow 1.x in Google Colab and convert it to a TFLite model for deploying on mobile devices like Android, iOS, Raspberry Pi, IoT devices using the sample TFLite object detection app from TensorFlow’s GitHub.

Roadmap

- Collect the dataset of images and label them to get their XML files.

- Install the TensorFlow Object Detection API.

- Generate the TFRecord files required for training. (need generate_tfrecord.py script and CSV files for this)

- Edit the model pipeline config file and download the pre-trained model checkpoint.

- Train and evaluate the model.

- Export and convert the model into TFLite (TensorFlow Lite) format.

- Deploy the TFLite model on Android / iOS / IoT devices using the sample TFLite object detection app from TensorFlow’s GitHub.

In this article, I will train a model on a custom mask-detection dataset and convert it to a TFLite model so it can be deployed on Android, iOS, and IoT devices. This is done in the 21 steps listed in the contents section below. (The first 17 steps are the same as in my previous article on training a Deep Learning model using TF1. Since TFLite supports only SSD models at the moment, we will use an SSD model here.)


- Install TensorFlow 1.x

- Import dependencies

- Create customTF1, training, and data folders in your google drive

- Create and upload your image files and XML files

- Upload the generate_tfrecord.py file to the customTF1 folder in your drive

- Mount drive and link your folder

- Clone the TensorFlow models git repository & Install TensorFlow Object Detection API

- Test the model builder

- Navigate to the data folder on your drive and unzip the images.zip and annotations.zip files into the data folder

- Create test_labels & train_labels

- Create the CSV files and the “label_map.pbtxt” file

- Create ‘train.record’ & ‘test.record’ files

- Download pre-trained model checkpoint

- Get the model pipeline config file, make changes to it, and put it inside the data folder

- Load Tensorboard

- Train the model

- Test your trained model

- Export SSD TFlite graph

- Convert TFlite graph to TFlite model

- Create TFLite with metadata

- Download TFLite model with metadata and deploy on Android device

HOW TO BEGIN?

- Open my Colab notebook on your browser.

- Click on File in the menu bar and click on Save a copy in drive. This will open a copy of my Colab notebook on your browser which you can now use.

- Next, once you have opened the copy of my notebook and are connected to the Google Colab VM, click on Runtime in the menu bar and click on Change runtime type. Select GPU and click on save.

LET’S BEGIN !

1) Install TensorFlow 1.x

#install tensorflow 1.15

!pip install tensorflow==1.15

#Check tensorflow version

import tensorflow as tf

print(tf.__version__)

2) Import dependencies

import os

import glob

import xml.etree.ElementTree as ET

import pandas as pd

3) Create customTF1, training, and data folders in your google drive

Create a folder named customTF1 in your google drive.

Create another folder named training inside the customTF1 folder ( training folder is where the checkpoints will be saved during training ).

Create another folder named data inside the customTF1 folder.

4) Create and upload your image files and XML files.

Create a folder named images for your custom dataset images and create another folder named annotations for its corresponding XML files.

Next, create their zip files and upload them to the customTF1 folder in your drive.

NOTE: Make sure all the image files have their extension as “.jpg” only. Other formats like “.png “, “.jpeg” or even “.JPG” will give errors since the generate_tfrecord and xml_to_csv scripts here have only “.jpg” in them. If you have different format images, then make changes in the scripts accordingly.
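Before zipping the images folder, it can help to check for files that would trip up the scripts. The following is a minimal sketch, not part of the original notebook; the function name find_non_jpg_files is hypothetical, and it only reports files, it does not convert them.

```python
from pathlib import Path


def find_non_jpg_files(image_dir):
    """Return the sorted names of files whose extension is not exactly '.jpg'.

    The comparison is case-sensitive on purpose, so '.JPG' and '.jpeg'
    are reported as well.
    """
    return sorted(
        p.name
        for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix != ".jpg"
    )


# Example call (the folder name is an assumption based on this tutorial):
# print(find_non_jpg_files("images"))
```

Note that renaming a “.png” file to “.jpg” does not change its encoding; such images should be re-saved as JPEG with an image tool.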

For datasets, you can check out my Dataset Sources at the bottom of this article in the credits section.

Collecting Images Dataset and labeling them to get their PASCAL_VOC XML annotations.

Labeling your Dataset

Input image example (Image1.jpg)

You can use any software for labeling like the labelImg tool.

I use an open-source labeling tool called OpenLabeling with a very simple UI.

Click on the link below to know more about the labeling process and other software for it:

NOTE : Garbage In = Garbage Out. Choosing and labeling images is the most important part. Try to find good quality images. The quality of the data goes a long way towards determining the quality of the result.

The output PASCAL_VOC labeled XML file looks as shown below:
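As a rough illustration of the fields a PASCAL_VOC annotation usually carries, here is a small sketch that parses one. The XML text and all its values are a made-up sample, not taken from my dataset, and read_voc_boxes is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample annotation; the sizes and coordinates are invented.
SAMPLE_VOC_XML = """<annotation>
  <folder>images</folder>
  <filename>Image1.jpg</filename>
  <size>
    <width>640</width>
    <height>480</height>
    <depth>3</depth>
  </size>
  <object>
    <name>with_mask</name>
    <bndbox>
      <xmin>120</xmin>
      <ymin>80</ymin>
      <xmax>310</xmax>
      <ymax>300</ymax>
    </bndbox>
  </object>
</annotation>"""


def read_voc_boxes(xml_text):
    """Return (filename, [(class_name, xmin, ymin, xmax, ymax), ...])."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.findall("object"):
        box = obj.find("bndbox")
        boxes.append((
            obj.findtext("name"),
            int(box.findtext("xmin")),
            int(box.findtext("ymin")),
            int(box.findtext("xmax")),
            int(box.findtext("ymax")),
        ))
    return root.findtext("filename"), boxes


print(read_voc_boxes(SAMPLE_VOC_XML))
```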

5) Upload the generate_tfrecord.py file to the customTF1 folder in your drive.

You can find the generate_tfrecord.py file here

6) Mount drive and link your folder

#mount drive

from google.colab import drive

drive.mount('/content/gdrive')

# this creates a symbolic link so that now the path /content/gdrive/My Drive/ is equal to /mydrive

!ln -s /content/gdrive/My\ Drive/ /mydrive

#list the contents in the drive

!ls /mydrive

7) Clone the TensorFlow models git repository & Install TensorFlow Object Detection API

Clone the TensorFlow models’ repository in the Colab VM

!git clone --q https://github.com/tensorflow/models.git

Install TensorFlow Object Detection API as instructed on the official TensorFlow Documentation page here

#navigate to /models/research folder to compile protos

%cd models/research

# Compile protos.

!protoc object_detection/protos/*.proto --python_out=.

# Install TensorFlow Object Detection API.

!cp object_detection/packages/tf1/setup.py .

!python -m pip install .

#Don't have to use --use-feature=2020-resolver as it is already the default now

8) Test the model builder

!python object_detection/builders/model_builder_tf1_test.py

9) Navigate to the data folder on your drive and unzip the images.zip and annotations.zip files into the data folder

Navigate to /mydrive/customTF1/data/

%cd /mydrive/customTF1/data/

Unzip the images.zip and annotations.zip files into the data folder

# unzip the datasets and their contents so that they are now in /mydrive/customTF1/data/ folder

!unzip /mydrive/customTF1/images.zip -d .

!unzip /mydrive/customTF1/annotations.zip -d .

10) Create test_labels & train_labels

Current working directory is /mydrive/customTF1/data/

Divide annotations into test_labels(20%) and train_labels(80%).
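One way to do this split in Python is sketched below. This is an assumption-laden sketch rather than the exact cell from my notebook: the helper name split_annotations and the seed parameter are hypothetical, and it copies the XML files instead of moving them so the annotations folder stays intact.

```python
import random
import shutil
from pathlib import Path


def split_annotations(annotations_dir, train_dir, test_dir,
                      test_fraction=0.2, seed=0):
    """Copy a random test_fraction of the XML files to test_dir, the rest to train_dir.

    Returns (number_of_train_files, number_of_test_files).
    """
    xml_files = sorted(Path(annotations_dir).glob("*.xml"))
    random.Random(seed).shuffle(xml_files)  # fixed seed keeps the split reproducible
    n_test = round(len(xml_files) * test_fraction)

    Path(train_dir).mkdir(parents=True, exist_ok=True)
    Path(test_dir).mkdir(parents=True, exist_ok=True)

    for i, xml_file in enumerate(xml_files):
        target = Path(test_dir) if i < n_test else Path(train_dir)
        shutil.copy(xml_file, target / xml_file.name)

    return len(xml_files) - n_test, n_test


# Example call from /mydrive/customTF1/data/ (folder names follow this tutorial):
# split_annotations("annotations", "train_labels", "test_labels")
```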

11) Create the CSV files and the “label_map.pbtxt” file

Current working directory is /mydrive/customTF1/data/

Run xml_to_csv script below to create test_labels.csv and train_labels.csv

This script also creates the label_map.pbtxt file using the classes mentioned in the XML files.
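The core of such a conversion can be sketched as follows. This is not the exact script from my notebook: it uses the standard csv module instead of pandas to stay dependency-free, the helper names are hypothetical, and the column layout (filename, width, height, class, xmin, ymin, xmax, ymax) is an assumption that should match whatever your generate_tfrecord.py expects.

```python
import csv
import glob
import os
import xml.etree.ElementTree as ET

# Assumed column layout; adjust to what your generate_tfrecord.py reads.
COLUMNS = ["filename", "width", "height", "class", "xmin", "ymin", "xmax", "ymax"]


def xml_dir_to_rows(xml_dir):
    """Return (rows, class_names) for every object in every XML file in xml_dir."""
    rows, class_names = [], []
    for xml_path in sorted(glob.glob(os.path.join(xml_dir, "*.xml"))):
        root = ET.parse(xml_path).getroot()
        size = root.find("size")
        for obj in root.findall("object"):
            name = obj.findtext("name")
            if name not in class_names:
                class_names.append(name)  # classes in order of first appearance
            box = obj.find("bndbox")
            rows.append([
                root.findtext("filename"),
                int(size.findtext("width")),
                int(size.findtext("height")),
                name,
                int(box.findtext("xmin")),
                int(box.findtext("ymin")),
                int(box.findtext("xmax")),
                int(box.findtext("ymax")),
            ])
    return rows, class_names


def write_csv(rows, csv_path):
    """Write the header and one row per labeled object."""
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(COLUMNS)
        writer.writerows(rows)


# Example calls (folder names follow this tutorial):
# rows, classes = xml_dir_to_rows("train_labels")
# write_csv(rows, "train_labels.csv")
```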

The 3 files that are created, i.e. train_labels.csv, test_labels.csv, and label_map.pbtxt, look as shown below:

The train_labels.csv contains the name of all the train images, the classes in those images, and their annotations.

The test_labels.csv contains the name of all the test images, the classes in those images, and their annotations.

The label_map.pbtxt file contains the names of the classes from your labeled XML files.

NOTE: I have 2 classes i.e. “with_mask” and “without_mask”.

Label map id 0 is reserved for the background label.

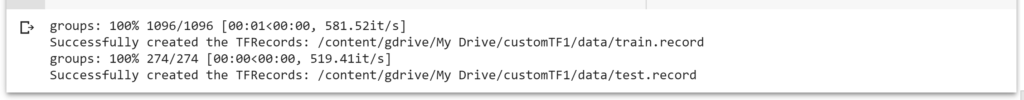
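To make the id convention concrete, here is a small sketch that builds the text of a label map from a list of class names, numbering the classes from 1 because id 0 is reserved for the background. The helper name build_label_map is hypothetical; the item layout follows the usual label_map.pbtxt format.

```python
def build_label_map(class_names):
    """Return label_map.pbtxt text with ids starting at 1 (0 is the background)."""
    items = []
    for class_id, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (class_id, name))
    return "\n".join(items)


print(build_label_map(["with_mask", "without_mask"]))
```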

12) Create train.record & test.record files

Current working directory is /mydrive/customTF1/data/

Run the generate_tfrecord.py script to create train.record and test.record files.

#Usage:

#!python generate_tfrecord.py output.csv output_pb.txt /path/to/images output.tfrecords

#FOR train.record

!python /mydrive/customTF1/generate_tfrecord.py train_labels.csv label_map.pbtxt images/ train.record

#FOR test.record

!python /mydrive/customTF1/generate_tfrecord.py test_labels.csv label_map.pbtxt images/ test.record

If everything goes well, you will see the following output:

The total number of image files is 1370. Since we divided the labels into two categories viz. train_labels(80%) and test_labels(20%), the number of files for “train.record” is 1096, and the number of files for “test.record” is 274.

13) Download pre-trained model checkpoint

Current working directory is /mydrive/customTF1/data/

You can choose any model for training depending upon your data and requirements. Read this blog for more info on which model to choose. The official list of detection checkpoints for TensorFlow 1.x can be found here.

However, since TFLite doesn’t support all models right now, the options for this are limited at the moment. TensorFlow is working towards adding more models with TFLite support. Read more about TFLite compatible models for all ML modules like object detection, image classification, image segmentation, etc here.

Currently, TFLite supports only SSD models (excluding EfficientDet)

In this tutorial, I will use the ssd_mobilenet_v2_coco_2018_03_29.tar.gz model.

#Download the pre-trained model ssd_mobilenet_v2_coco_2018_03_29.tar.gz into the data folder & unzip it

!wget http://download.tensorflow.org/models/object_detection/ssd_mobilenet_v2_coco_2018_03_29.tar.gz

!tar -xzvf ssd_mobilenet_v2_coco_2018_03_29.tar.gz

14) Get the model pipeline config file, make changes to it, and put it inside the data folder

Download ssd_mobilenet_v2_coco.config from /content/models/research/object_detection/samples/configs/ folder. Make the required changes to it and upload it to the /mydrive/customTF1/data folder.

OR

Edit the config file from /content/models/research/object_detection/samples/configs/ in Colab VM and copy the edited config file to the /mydrive/customTF1/data folder.

You can also find the pipeline config file inside the model checkpoint folder we just downloaded in the previous step.

You need to make the following changes:

- change num_classes to the number of your classes.

- change test.record path, train.record path & labelmap path to the paths where you have created these files (paths should be relative to your current working directory while training).

- change fine_tune_checkpoint to the path where the downloaded checkpoint from step 13 is.

- change fine_tune_checkpoint_type with value classification or detection depending on the type.

- change batch_size to any multiple of 8 depending upon the capability of your GPU (e.g. 24, 128, …, 512). The better the GPU capability, the higher you can go. Mine is set to 24.

- change num_steps to the number of steps you want the detector to train.
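If you prefer to script the simple numeric edits instead of making them by hand, a sketch like the following can work on the config text. It is only an illustration: update_pipeline_config is a hypothetical helper, it replaces every occurrence of each field it finds, and it does not touch the record and label map paths or fine_tune_checkpoint_type, which you still need to edit yourself.

```python
import re


def _set_field(text, pattern, new_line):
    # A function replacement avoids backslash escapes in new_line being interpreted.
    return re.sub(pattern, lambda match: new_line, text)


def update_pipeline_config(text, num_classes, batch_size, num_steps,
                           fine_tune_checkpoint):
    """Return config text with the four basic fields replaced."""
    text = _set_field(text, r"num_classes:\s*\d+", "num_classes: %d" % num_classes)
    text = _set_field(text, r"batch_size:\s*\d+", "batch_size: %d" % batch_size)
    text = _set_field(text, r"num_steps:\s*\d+", "num_steps: %d" % num_steps)
    # 'fine_tune_checkpoint_type' is not matched because the pattern requires ':'
    # directly after 'fine_tune_checkpoint'.
    text = _set_field(text, r'fine_tune_checkpoint:\s*"[^"]*"',
                      'fine_tune_checkpoint: "%s"' % fine_tune_checkpoint)
    return text


# Example usage (both paths are assumptions based on the folders in this tutorial):
# path = "/mydrive/customTF1/data/ssd_mobilenet_v2_coco.config"
# with open(path) as f:
#     edited = update_pipeline_config(
#         f.read(), 2, 24, 50000,
#         "/mydrive/customTF1/data/ssd_mobilenet_v2_coco_2018_03_29/model.ckpt")
# with open(path, "w") as f:
#     f.write(edited)
```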

Max batch size= available GPU memory bytes / 4 / (size of tensors + trainable parameters)
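The rule of thumb above can be written out as a small calculation. This is a sketch only: the division by 4 is read here as 4 bytes per 32-bit float, and the GPU memory, tensor size, and parameter count in the example are hypothetical numbers, not measurements of this model.

```python
def max_batch_size(gpu_memory_bytes, tensor_size, trainable_params):
    """Apply: available GPU memory bytes / 4 / (size of tensors + trainable parameters)."""
    return gpu_memory_bytes // 4 // (tensor_size + trainable_params)


def round_down_to_multiple(value, multiple=8):
    """Round down to the nearest multiple, since batch_size should be a multiple of 8."""
    return (value // multiple) * multiple


# Hypothetical figures: a 16 GiB GPU, 50M tensor elements, 4.5M trainable parameters.
estimate = max_batch_size(16 * 1024**3, 50_000_000, 4_500_000)
print(estimate, round_down_to_multiple(estimate))
```

Treat the result as an upper bound to experiment below, not as a guarantee that the batch will fit in memory.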

Next, copy the edited config file.

# copy the edited config file from the samples/configs/ directory to the data/ folder in your drive

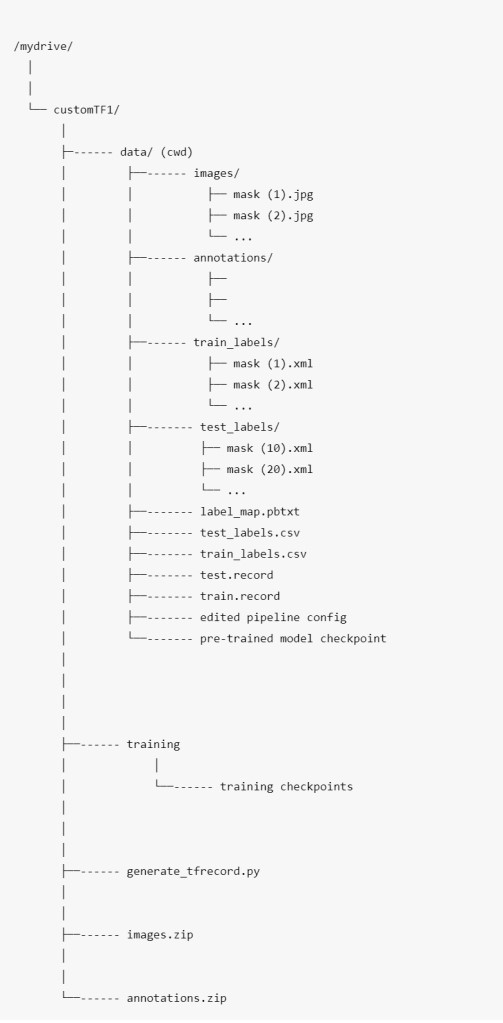

!cp /content/models/research/object_detection/samples/configs/ssd_mobilenet_v2_coco.config /mydrive/customTF1/data/

The workspace at this point:

There are many data augmentation options that you can add. Check the full list here. For beginners, the above changes are sufficient.

Data Augmentation Suggestions (optional)

First, you should train the model using the sample config file with the above basic changes and see how well it does. If you are overfitting, then you might want to do some more image augmentations.

In the sample config file: random_horizontal_flip & ssd_random_crop are added by default. You could try adding the following as well:

(Note: Each image augmentation will increase the training time drastically)

1. In train_config {}:

data_augmentation_options {

random_adjust_contrast {

}

}

data_augmentation_options {

random_rgb_to_gray {

}

}

data_augmentation_options {

random_vertical_flip {

}

}

data_augmentation_options {

random_rotation90 {

}

}

data_augmentation_options {

random_patch_gaussian {

}

}

2. In model {} > ssd {} > box_predictor {}: set use_dropout to true. This will help you to counter overfitting.

3. In eval_config: {} set num_examples to the number of testing images you have and remove max_evals to evaluate indefinitely.

eval_config: {

num_examples: 274 # set this to the number of test images we divided earlier

num_visualizations: 20 # the number of visualization to see in tensorboard

}

15) Load Tensorboard

%load_ext tensorboard

%tensorboard --logdir '/content/gdrive/MyDrive/customTF1/training'

16) Train the model

Navigate to the object_detection folder in Colab VM

%cd /content/models/research/object_detection

Training & evaluation using model_main.py

Run the following command from the object_detection directory:

!python model_main.py --pipeline_config_path=/mydrive/customTF1/data/ssd_mobilenet_v2_coco.config --model_dir=/mydrive/customTF1/training --num_train_steps=200000 --sample_1_of_n_eval_examples=1 --alsologtostderr

where pipeline_config_path points to the pipeline config file and model_dir points to the directory in which training checkpoints and events will be written. Note that this binary will interleave both training and evaluation. The num_train_steps is the number of steps you want your model to train for. The default is 200000 in the ssd_mobilenet_v2_coco.config file. You can either set a different value here in the command or just change it in the config file.

NOTE :

For best results, you should stop the training when the loss is less than 1 if possible, else train the model until the loss does not show any significant change for a while. You can reduce the number of steps to 50000 and check if the loss goes below 1. If not, then you can retrain the model with a higher number of steps.

The loss will vary for different models. MobileNet-SSD starts with a loss of about 15 to 20 and should be trained until the loss is consistently under 1. Ideally, we want the loss to be as low as possible but we should be careful so that the model is not over-fitting. A loss between 0.5 and 1 seems to give good results.
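The stopping guidance above can be expressed as a simple check over the loss values you read from the training logs. This is a sketch of the rule of thumb, not something model_main.py does for you; should_stop, patience, and min_delta are hypothetical names and thresholds that you would tune yourself.

```python
def should_stop(losses, target=1.0, patience=5, min_delta=0.01):
    """Decide whether to stop, given logged loss values ordered oldest first.

    Stop when the last `patience` values are all below `target`, or when the
    best loss in the last `patience` values improved on the earlier best by
    less than `min_delta` (the loss has stopped changing significantly).
    """
    recent = losses[-patience:]
    if len(losses) >= patience and all(loss < target for loss in recent):
        return True
    if len(losses) > patience:
        best_before = min(losses[:-patience])
        if best_before - min(recent) < min_delta:
            return True
    return False


print(should_stop([15, 8, 3, 0.9, 0.8, 0.9, 0.7, 0.8]))  # consistently under 1
print(should_stop([15, 10, 6, 4, 3, 2.5]))               # still improving
```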

The output after running the above command will normally look like it has “frozen”, but DO NOT rush to cancel the process. The training outputs logs only every 100 steps by default, therefore if you wait for a while, you should see a log for the loss at step 100. The time you should wait can vary greatly, depending on whether you are using a GPU and the chosen value for batch_size in the config file, so be patient.

RETRAINING THE MODEL ( in case you get disconnected )

If you get disconnected or lose your session on Colab VM, you can start your training where you left off as the checkpoint is saved on your drive inside the training folder. To restart the training simply run steps 1, 2, 6, 7, 8, 15, and 16.

Note that since we already have all the files required for training, like the record files, our edited pipeline config file, the label_map.pbtxt file, and the model checkpoint folder, we do not need to create these again.

The training automatically restarts from the last trained checkpoint itself.

However, if you see that it doesn’t restart training from the last checkpoint you can make 1 change in the pipeline config file. Change fine_tune_checkpoint to where your latest trained checkpoints have been written and have it point to the latest checkpoint as shown below:

fine_tune_checkpoint: "/mydrive/customTF1/training/model.ckpt-xxxx" (where model.ckpt-xxxx is the latest checkpoint)
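To find the value of xxxx without browsing the folder, you can look for the highest step number among the checkpoint files. The sketch below assumes the usual TF1 naming, where each checkpoint has a model.ckpt-<step>.index file; latest_checkpoint_prefix is a hypothetical helper name.

```python
import re
from pathlib import Path


def latest_checkpoint_prefix(training_dir):
    """Return the 'model.ckpt-<step>' path prefix with the highest step, or None."""
    best_step, best_prefix = -1, None
    for index_file in Path(training_dir).glob("model.ckpt-*.index"):
        match = re.fullmatch(r"model\.ckpt-(\d+)\.index", index_file.name)
        if match and int(match.group(1)) > best_step:
            best_step = int(match.group(1))  # compare numerically, not as text
            best_prefix = str(index_file.with_suffix(""))  # drop '.index'
    return best_prefix


# Example call (path follows this tutorial):
# print(latest_checkpoint_prefix("/mydrive/customTF1/training"))
```

The same prefix is what the export commands in the later steps expect for trained_checkpoint_prefix.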

17) Test your trained model

Export inference graph

Current working directory is /content/models/research/object_detection

!python export_inference_graph.py --input_type image_tensor --pipeline_config_path /mydrive/customTF1/data/ssd_mobilenet_v2_coco.config --trained_checkpoint_prefix /mydrive/customTF1/training/model.ckpt-141649 --output_directory /mydrive/customTF1/data/inference_graph

Test your trained Object Detection model on images

Current working directory is /content/models/research/object_detection

This step is optional.

# Different font-type and font-size for labels text

!wget https://freefontsdownload.net/download/160187/arial.zip

!unzip arial.zip -d .

%cd utils/

!sed -i "s/font = ImageFont.truetype('arial.ttf', 24)/font = ImageFont.truetype('arial.ttf', 50)/" visualization_utils.py

%cd ..

Test your trained model

- Make changes in lines 30 and 34. Line 30 is the number of classes and line 34 is the path to your test images folder.

- You don’t have to change the paths in lines 41 and 42 if you have created all folders and files according to this tutorial.

18) Export SSD TFlite graph

Current working directory is /content/models/research/object_detection

#Export ssd tflite graph

!python export_tflite_ssd_graph.py --pipeline_config_path /mydrive/customTF1/data/ssd_mobilenet_v2_coco.config --trained_checkpoint_prefix /mydrive/customTF1/training/model.ckpt-141649 --output_directory /mydrive/customTF1/data/tflite --add_postprocessing_op=true

Change the model.ckpt-141649 in the trained_checkpoint_prefix above to model.ckpt-xxxxxx, where xxxxxx is the step number of the latest checkpoint you want to use.

The add_postprocessing_op parameter above when set to true creates the TFLite graph with 4 output values of TFLite_Detection_PostProcess for Object Detection which we will use in the next step for creating our TFLite model.
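The four outputs are the detection boxes, the class indices, the scores, and the number of detections. As a rough sketch of how an app consumes them, the function below filters one image's detections by score using plain Python lists. The helper name, the 0.5 threshold, and the sample values are hypothetical; it assumes boxes are [ymin, xmin, ymax, xmax] in normalized coordinates and that class indices are zero-based positions in your labels list.

```python
def filter_detections(boxes, classes, scores, num_detections, labels,
                      min_score=0.5):
    """Return [(label, score, (ymin, xmin, ymax, xmax)), ...] above min_score."""
    results = []
    for i in range(int(num_detections)):
        if scores[i] >= min_score:
            results.append((labels[int(classes[i])], scores[i], tuple(boxes[i])))
    return results


# Made-up outputs for a single image with two candidate detections.
detections = filter_detections(
    boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]],
    classes=[0.0, 1.0],
    scores=[0.9, 0.3],
    num_detections=2.0,
    labels=["with_mask", "without_mask"],
)
print(detections)
```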

19) Convert TFlite graph to TFlite model

Check input and output tensor names

import tensorflow as tf

# Load the exported TFLite graph and print every node name to find the input and output tensors

gf = tf.GraphDef()

with open('/mydrive/customTF1/data/tflite/tflite_graph.pb', 'rb') as m_file:

    gf.ParseFromString(m_file.read())

for n in gf.node:

    print(n.name)

Convert to TFLite (use either method)

NOTE: Do not run both the commands together. Both the commands create “detect.tflite” model. I’m writing the same filename for both as that is what is used in the next commands. If you run both the commands, the second one will overwrite the first one. I have commented out one of these commands.

Run the command for Floating-point first and create the TFLite model with metadata in the next step(i.e. step 20) for it. Once you have the “detect.tflite” model with the metadata you can download it. Then come back to this step and do the same for the Quantized model.
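When you come back for the quantized model, note that the quantized command in this step passes mean_values=128 and std_dev_values=128. According to the TensorFlow Lite converter documentation, these describe how a uint8 input value maps to a real value: real = (quantized - mean) / std_dev. The short sketch below just evaluates that mapping; dequantize is a hypothetical helper name.

```python
def dequantize(quantized_value, mean_value=128, std_dev_value=128):
    """Map a uint8 input value to the real value the model sees."""
    return (quantized_value - mean_value) / std_dev_value


# The ends and the middle of the uint8 range.
for q in (0, 128, 255):
    print(q, dequantize(q))
```

With these settings the 0 to 255 pixel range maps to roughly -1 to 1, which matches the normalized input the SSD MobileNet model expects.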

For FLOATING-POINT MODEL

#FLOAT

!tflite_convert --graph_def_file /mydrive/customTF1/data/tflite/tflite_graph.pb --output_file /mydrive/customTF1/data/tflite/detect.tflite --output_format TFLITE --input_shapes 1,300,300,3 --input_arrays normalized_input_image_tensor --output_arrays 'TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' --inference_type FLOAT --allow_custom_ops

For QUANTIZED MODEL

#QUANTIZED_UINT8

#!tflite_convert --graph_def_file=/mydrive/customTF1/data/tflite/tflite_graph.pb --output_file=/mydrive/customTF1/data/tflite/detect.tflite --output_format=TFLITE --input_shapes=1,300,300,3 --input_arrays=normalized_input_image_tensor --output_arrays='TFLite_Detection_PostProcess','TFLite_Detection_PostProcess:1','TFLite_Detection_PostProcess:2','TFLite_Detection_PostProcess:3' --inference_type=QUANTIZED_UINT8 --default_ranges_min=0 --default_ranges_max=255 --mean_values=128 --std_dev_values=128 --change_concat_input_ranges=false --allow_custom_ops

20) Create TFLite metadata

The new TensorFlow Lite samples require that the final TFLite model should have metadata attached to it in order to run. You can read more on this here on the TensorFlow site. Run the following steps to get the TFLite model with metadata.

Install tflite_support_nightly

TF1 doesn’t work correctly with tflite_support. Also, usage of the latest version of tf-nightly is recommended.

!pip install tflite_support_nightly

Create a separate folder named “tflite_with_metadata” inside the “tflite” folder to save the final TFLite model with metadata attached to it.

%cd /mydrive/customTF1/data/

%cd tflite/

!mkdir tflite_with_metadata

%cd ..

Create and upload the labelmap.txt file

Create and upload the “labelmap.txt” file, which we will use inside Android Studio later as well. This file is different from the “label_map.pbtxt” file which we used in Steps 11 & 12. This “labelmap.txt” file only has the names of the classes, one per line, and nothing more. Upload this file to the /mydrive/customTF1/data folder. The labelmap.txt file looks as shown below:
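If you would rather generate the file than type it, a few lines of Python are enough. This is a minimal sketch with a hypothetical helper name, write_labelmap; it assumes the class names are listed in the same order as their ids in label_map.pbtxt.

```python
def write_labelmap(class_names, path):
    """Write one class name per line and nothing else."""
    with open(path, "w") as f:
        f.write("\n".join(class_names) + "\n")


# Example call for the two classes in this tutorial (the path follows this tutorial):
# write_labelmap(["with_mask", "without_mask"], "/mydrive/customTF1/data/labelmap.txt")
```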

Run the code in the code block below to create the TFLite model with metadata.

(NOTE: Change the paths in lines 14, 15 & 16 & 87 in the code block below to your paths. That is only if you’re using different paths for your files. But if you’re following this tutorial you can leave them as is)

- Line 14: The input TFLite model without metadata created above in Step 19.

- Line 15 and 87: The labelmap.txt file.

- Line 16: The location for the final TFLite model output with the metadata added to it.

21) Download the new detect.tflite model with metadata, put it inside the assets folder in the object detection Android app, and run it in Android Studio

IMPORTANT: Download the TensorFlow Lite examples archive from here and unzip it. (You can also switch to a previous version on GitHub using the Branches/Tags dropdown to download a previous version. The latest version might only have the files for Kotlin. Download any older version for the Java files that we need in this tutorial)

OR

Use this link to download the older version I have used in this tutorial from my GitHub repository.

Next, extract the files. You will find an object detection folder inside.

C:\Users\zizou\Downloads\examples-master\examples-master\lite\examples\object_detection

Next, copy the detect.tflite model with metadata and the labelmap.txt file inside the assets folder in the object detection Android app folder.

...examples-master\examples-master\lite\examples\object_detection\android\app\src\main\assets

Next, we have to make certain changes to the code as mentioned in the TensorFlow 1 GitHub link Running on mobile with TensorFlow Lite. I have shown all the changes below:

The changes to the code are as follows:

- Firstly, edit the gradle build module file. Open the “build.gradle” file $TF_EXAMPLES/lite/examples/object_detection/android/app/build.gradle to comment out the apply from: ‘download_model.gradle‘ which basically downloads the default object detection app’s TFLite model and overwrites your assets.

// apply from:'download_model.gradle'

- Secondly, if your model is named detect.tflite and your labels file labelmap.txt, the example will use them automatically as long as they’ve been properly copied into the base assets directory. To confirm, open up the $TF_EXAMPLES/lite/examples/object_detection/android/app/src/main/java/org/tensorflow/demo/DetectorActivity.java file in a text editor or inside Android Studio itself and find the definition of TF_OD_API_LABELS_FILE. Verify that it points to your label map file: “labelmap.txt“. Note that if your model is quantized, the flag TF_OD_API_IS_QUANTIZED is set to true, and if your model is floating point, the flag TF_OD_API_IS_QUANTIZED is set to false. This new section of DetectorActivity.java should now look as follows.

For a quantized model

private static final boolean TF_OD_API_IS_QUANTIZED = true;

private static final String TF_OD_API_MODEL_FILE = "detect.tflite";

private static final String TF_OD_API_LABELS_FILE = "labelmap.txt";

For a floating-point model

private static final boolean TF_OD_API_IS_QUANTIZED = false;

private static final String TF_OD_API_MODEL_FILE = "detect.tflite";

private static final String TF_OD_API_LABELS_FILE = "labelmap.txt";

- Finally, connect a mobile device and run the app. Test your app before making any other new improvements or adding more features to it. Now that you’ve made a basic app for this, you can try to make changes to your TFLite model as I mentioned earlier in this article. I have also given the links to the TensorFlow site’s pages where you can learn how to apply other features and optimizations like post-training quantization etc. Read the links given below in the Documentation under the Credits section.

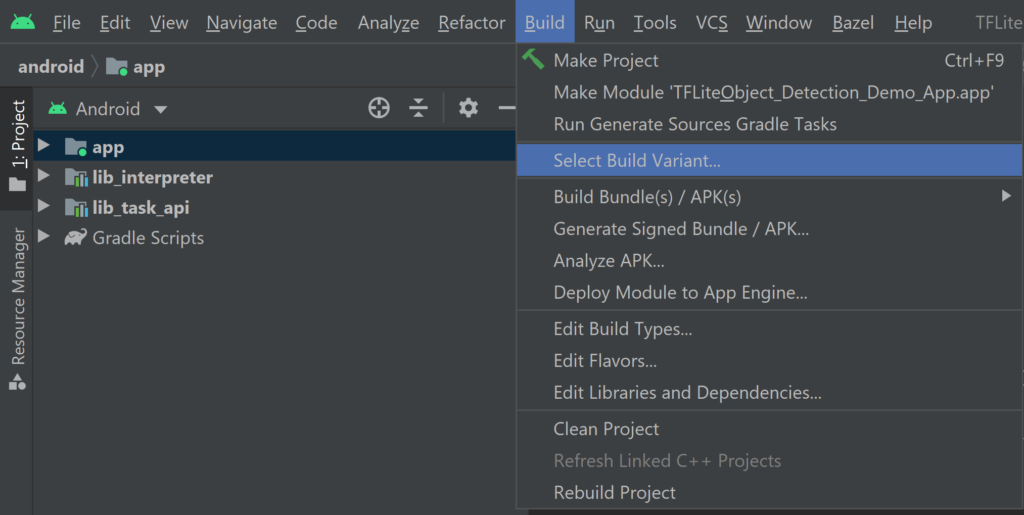

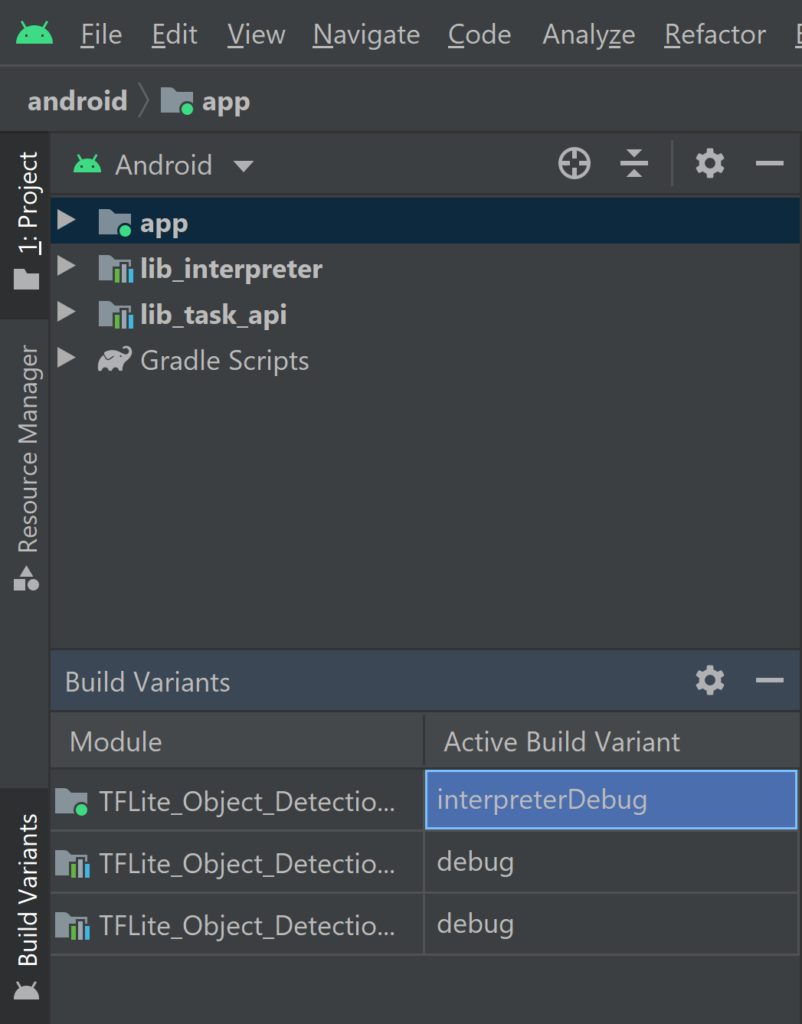

NOTE: This object detection Android reference app demonstrates two implementation solutions:

(1) lib_task_api that leverages the out-of-box API from the TensorFlow Lite Task Library;

(2) lib_interpreter that creates the custom inference pipeline using the TensorFlow Lite Interpreter Java API.

The build.gradle inside app folder shows how to change flavorDimensions "tfliteInference" to switch between the two solutions.

Inside Android Studio, you can change the build variant to whichever one you want to build and run, just go to Build -> Select Build Variant and select one from the drop-down menu. See configure product flavors in Android Studio for more details.

For beginners, you can just leave it as it is. The default implementation is lib_task_api. To learn more about these implementations read the following TensorFlow docs.

https://www.tensorflow.org/lite/inference_with_metadata/task_library/overview

https://www.tensorflow.org/lite/inference_with_metadata/lite_support

To choose a build variant, select the app module in the project manager inside Android Studio, go to Build in the menu bar, and click on Select Build Variant. You will see a window pop up where you can choose your variant as shown below.

NOTE:

The dataset I have collected for mask detection contains mostly close-up images. For more long-shot images you can search online. There are many sites where you can download labeled and unlabeled datasets. I have given a few links at the bottom under Dataset Sources. I have also given a few links for mask datasets. Some of them have more than 10,000 images.

Though there are certain tweaks and changes we can make to our training config file or add more images to the dataset for every type of object class through augmentation, we have to be careful so that it does not cause overfitting which affects the accuracy of the model.

For beginners, you can start simply by using the config file I have uploaded on my GitHub. I have also uploaded my mask images dataset along with the PASCAL_VOC format label files. It might not be the best dataset, but it will give you a good start on how to train your own custom object detector using an SSD model. You can find a labeled dataset of better quality or an unlabeled dataset and label it yourself later.

I have trained this app on a particular scenario for a person wearing or not wearing a face mask and my dataset mostly had close-up images as mentioned above. If we use this app for other scenarios it might give some false positives. You can train the model for your scenario by training on the right dataset. Moreover, if you want to exclude certain objects in your scenario, you can train those objects and then write code in your app to exclude those objects and include only the ones you want.

There are many ways you can customize these ML apps and also handle the false positives in these apps. You can find scripts for that online. This tutorial shows you how to get started with mobile ML. Have fun!

My GitHub

Files for training

Train object detection model TF 1.x

NOTE: I have uploaded the TFLite models for both TensorFlow 1 and TensorFlow 2 for test purposes. Change the name for the one you want to use in the assets folder

My Mask Dataset

https://www.kaggle.com/techzizou/labeled-mask-dataset-pascal-voc-format

My Colab notebook for this

My Youtube Video on this

CREDITS

Documentation / References

- Tensorflow Introduction

- Tensorflow Models Git Repository

- TensorFlow Object Detection API Repository

- TF Object Detection Documentation

- TF1 installation guide

- TensorFlow 1 Detection Model Zoo

- Training and Evaluation with TensorFlow 1

- TensorFlow Lite Object Detection Android Demo

- Running on mobile with TensorFlow Lite

- TensorFlow Lite Converter documentation

- TensorFlow Lite sample applications

- Tensorflow tutorials

- Object Detection for Mobile and IoT devices

- Tensorflow Hub

- TensorFlow Hub Object Detection Colab

- TensorFlow Lite metadata

- TensorFlow Lite hosted models

- TensorFlow Lite Task Library

- TensorFlow Lite Support Library

- TensorFlow Lite Task Library integrate object detectors

- TensorFlow Lite Interpreter Java API

- Object detector tutorial

Dataset Sources

You can download datasets for many objects from the sites mentioned below. These sites also contain images of many classes of objects along with their annotations/labels in multiple formats such as the YOLO_DARKNET txt files and the PASCAL_VOC xml files.

Mask Dataset Sources

More Mask Datasets

- Prasoonkottarathil Kaggle (20000 images)

- Ashishjangra27 Kaggle (12000 images )

TROUBLESHOOTING

If you get a NumPy error like “Cannot convert a symbolic Tensor (cond_2/strided_slice:0) to a numpy array”, you can fix it by downgrading your NumPy version, uninstalling and installing pycocotools. Run the 3 commands below:

!pip install numpy==1.19.5

!pip uninstall -y pycocotools

!pip install pycocotools